Universal Future-Proof Haptic System

When you create a soundtrack by mixing different sounds into the different channels for the left and right speaker, you have made use of modern stereo sound systems, but your sounds will not take advantage of 5.1 surround sound. And similarly, if you create 6 different tracks for all speakers of the 5.1 sound system, then what are you going to do when 7.1 sound systems come out?

Rather than place a sound in a specific speaker, the better approach is to place sounds in a 3D space around the user and then let the software play the sound as best it can out of whichever speakers the user has. This system for making audio content is future-proof because as additional speakers are added around and above and below, it will be able to accurately make use of all of them.

This is what we need with haptics. It has to be able to accommodate an arbitrary level of fidelity, so that games made today and in the future are playable. At the moment it is a real mess, and game devs have to hardcode in support for specific devices or it won’t work at all. To cut through all this mess, I think what you need is middleware – a universal haptic interface through which games and haptic devices communicate.

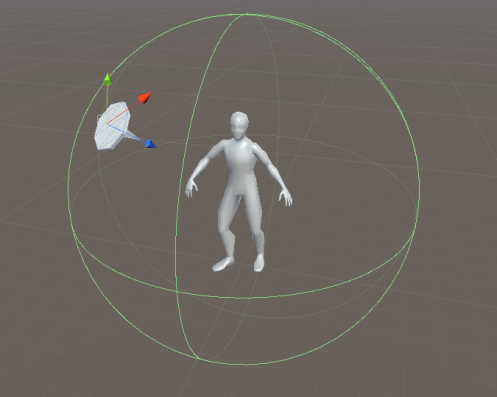

Bubble Effects:

These are general locational effects that are applied primarily to large devices that surround the player, such as wind machines, heaters and floor surfaces, but they can be translated down into local body effects such as gamepad rumble as well.

Bubble effects are specified in terms of a 3D location, direction, and magnitude around the player’s centre of mass. Think of them like sounds that you place in 3D space to be delivered through a surround sound speaker system. So you can feed in an explosion location and magnitude, a wind and rain direction, rough terrain, slippery mud, snowstorm winds, etc. On the device side, for example, the Cyberith omni-directional treadmill has a floor that can rumble.

If there are no bubble devices then the developer and the player have the option to translate those haptic outputs into body effects instead (or in addition to the bubble effects).
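As a rough illustration of how a bubble effect might be spread across whatever bubble devices the player has, here is a minimal sketch. The names `BubbleDevice` and `bubble_gains` are hypothetical, and the cosine weighting is just one plausible choice, not part of any existing system: each device is driven in proportion to how closely its position lines up with the direction of the effect.

```python
import math
from dataclasses import dataclass


@dataclass
class BubbleDevice:
    # Hypothetical device record: a name plus a position relative to
    # the player's centre of mass.
    name: str
    position: tuple


def _normalise(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        return (0.0, 0.0, 0.0)
    return tuple(c / length for c in v)


def bubble_gains(effect_position, magnitude, devices):
    """Return {device name: output level} for one bubble effect.

    Assumption: a device responds in proportion to the cosine of the
    angle between its own direction and the effect's direction, and
    devices facing away from the effect stay silent.
    """
    effect_dir = _normalise(effect_position)
    gains = {}
    for device in devices:
        device_dir = _normalise(device.position)
        alignment = sum(a * b for a, b in zip(effect_dir, device_dir))
        gains[device.name] = magnitude * max(0.0, alignment)
    return gains


fans = [
    BubbleDevice("front_fan", (0.0, 0.0, 1.0)),
    BubbleDevice("rear_fan", (0.0, 0.0, -1.0)),
]
# An explosion straight ahead drives only the front fan.
print(bubble_gains((0.0, 0.0, 5.0), 0.8, fans))
```

With an empty device list the function returns an empty dict, which is the point at which a caller could fall back to translating the effect into a body effect.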

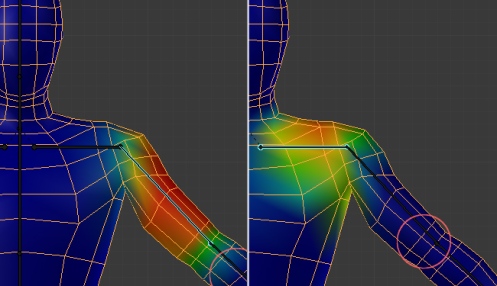

Body Effects:

These are local effects that are specified in terms of location on a generic player body. You can just specify a point location or, as when rigging a character for animation, paint vertex weights across the mesh to cover a whole area. When an effect is passed through to the player’s hardware, the system searches for the haptic device nearest to that location.

So an effect may occur on your elbow, but since you don’t have any haptic device there, it will automatically look up your arm and into your body, or down your arm to your hand, until it finds the nearest haptic device to use to deliver the effect. You can specify whether it should try to reach in towards the centre of mass, or out towards the limbs in its search.
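The search described above could be sketched like this. The body layout in `PARENT`, the function names, and the rule "try the preferred direction first, then the other one" are all assumptions made for illustration; a real generic body would have many more parts.

```python
from collections import deque

# Hypothetical generic body: each part maps to its parent, which is
# one step closer to the centre of mass.
PARENT = {
    "left_hand": "left_forearm",
    "left_forearm": "left_elbow",
    "left_elbow": "left_upper_arm",
    "left_upper_arm": "chest",
    "chest": None,
}


def _inward(part):
    # Walk from the part towards the centre of mass.
    while part is not None:
        yield part
        part = PARENT[part]


def _outward(part):
    # Walk breadth-first from the part out towards the extremities.
    queue = deque([part])
    while queue:
        current = queue.popleft()
        yield current
        queue.extend(c for c, p in PARENT.items() if p == current)


def find_device(part, equipped, prefer="inward"):
    """Return the device on the nearest equipped body part, or None.

    `equipped` maps body part names to device names.
    """
    walks = (_inward, _outward) if prefer == "inward" else (_outward, _inward)
    for walk in walks:
        for candidate in walk(part):
            if candidate in equipped:
                return equipped[candidate]
    return None


equipped = {"chest": "vest", "left_hand": "glove"}
print(find_device("left_elbow", equipped, prefer="inward"))
print(find_device("left_elbow", equipped, prefer="outward"))
```

Here an elbow hit lands on the vest when searching inward and on the glove when searching outward.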

You can specify whether it should prioritise location or direction. For example if a bullet comes from the right and hits your character’s left arm, but all you have on is a haptic vest, then should the vest deliver an impact on the left (where the arm was hit) or on the right (the direction the effect came from)? If you prioritise location, it will be felt on the left near the arm. If you prioritise direction, it will be felt on the right in the direction it came from.
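The bullet example can be written down as a small sketch. Everything here is hypothetical: the two-actuator vest, the coordinates (x is positive towards the player’s right), and the `pick_actuator` helper. Prioritising direction is approximated by choosing the actuator closest to where the shot came from.

```python
import math

# Hypothetical vest with one actuator on each side of the torso.
VEST_ACTUATORS = {
    "vest_left": (-0.2, 1.3, 0.0),
    "vest_right": (0.2, 1.3, 0.0),
}


def pick_actuator(hit_point, source_point, actuators, prioritise="location"):
    """Choose which actuator should deliver an impact.

    prioritise="location": the actuator nearest to where the body was hit.
    prioritise="direction": the actuator nearest to where the hit came from.
    """
    target = hit_point if prioritise == "location" else source_point
    return min(actuators, key=lambda name: math.dist(actuators[name], target))


left_arm = (-0.45, 1.2, 0.0)   # where the bullet landed
shooter = (5.0, 1.5, 0.0)      # off to the player's right

print(pick_actuator(left_arm, shooter, VEST_ACTUATORS, "location"))
print(pick_actuator(left_arm, shooter, VEST_ACTUATORS, "direction"))
```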

Just as bubble effects can be translated down into body effects, body effects can be translated up into bubble effects, just in case the player lacks any kind of haptic vest but has wind machines.

Output parameters (every haptic event can include these pieces of data, and it will all be sent as a single input to a haptic device):

- Location: All haptic effects can have a position in relation to the player’s body (for body effects) or centre of mass (for bubble effects). Or they can be global and apply everywhere.

- Vector: With direction and magnitude, and possibly torque. If the device is incapable of representing one of these (e.g. a rumble motor that can only vibrate to convey magnitude), then it will only represent what it can. These force vectors can be used not only for active events like an explosion that pushes the player’s body, but also for the passive resistance of objects that prevent the player from moving through them. If the haptic device is capable of limiting motion, then it would be done through vectors.

- Waveform: An audio file. High-fidelity haptic devices (e.g. ViviTouch) can output exactly the “texture” of a waveform, while rumble motors can only try their best to match their rumble speed to the waveform.

- Image / Video: Two-dimensional surface-creation devices (e.g. the floor of an omni-directional treadmill, or a surface under a fingertip) can take a static image or a moving video file and use the brightness of each pixel to determine the height of each section of the surface. Or you could feed in the heightmap of the terrain mesh, or any other 2D array.

Haptic domains:

- Kinetic: The usual physical forces we associate with haptic feedback.

- Thermal: Changes in temperature that can be achieved with air conditioners, heat lamps, and other such devices to create the heat from an explosion or the chill of a mountaintop.
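To make the output parameters and domains above a bit more concrete, here is a minimal sketch of how they might be bundled into one event, and how two very different devices could each take only what they can represent. The `HapticEvent` fields, `rumble_level` and `surface_heights` are hypothetical names, and the scaling choices (clamping to 1.0, a 10 mm maximum height) are arbitrary assumptions.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class HapticEvent:
    # Every field is optional: the event carries whatever the game supplied.
    domain: str = "kinetic"                           # "kinetic" or "thermal"
    location: Optional[tuple] = None                  # None means global
    vector: Optional[tuple] = None                    # direction scaled by magnitude
    waveform: Optional[Sequence[float]] = None        # audio samples in [-1, 1]
    image: Optional[Sequence[Sequence[int]]] = None   # greyscale rows, 0..255


def rumble_level(event):
    """A rumble motor can only convey magnitude, so reduce the event to one number."""
    if event.vector is not None:
        return min(1.0, math.sqrt(sum(c * c for c in event.vector)))
    if event.waveform:
        return min(1.0, max(abs(s) for s in event.waveform))
    return 0.0


def surface_heights(event, max_height_mm=10.0):
    """A surface device maps pixel brightness to the height of each cell."""
    if event.image is None:
        return None
    return [[pixel / 255 * max_height_mm for pixel in row] for row in event.image]


print(rumble_level(HapticEvent(waveform=[0.1, -0.5, 0.2])))
print(surface_heights(HapticEvent(image=[[0, 255]])))
```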

One can imagine in the future a single haptic module that can take in all these different forms of data and produce all these different effects. There could be a module that straps on, like a PrioVR sensor, for body effects. It is also easy to imagine a surround sound system where each speaker also has a built-in way of blowing hot and cold air, and maybe even creating the pulse of an explosive shockwave with ultrasonic or infrasound transducers. But for now, the system would simply send the data to whatever devices the player has, and they would do whatever they can.

What This Means

This system was designed with “the path of least resistance” in mind, with minimal extra work needed from developers, players and hardware manufacturers for haptic effects to be supported. Game events already take place in specific locations and involve physics forces, and all the developer needs to do is feed that data into the system as a haptic event, and it will make it work with whatever devices the player has – no longer do developers need to hardcode in specific support for specific devices. As long as the makers of the hardware include an interface with the system, and game devs support the system, it will work with all current and future devices, in whatever arrangement.

That’s the beauty of this system: If you make a game and then later a new device is released, as long as it interfaces with this haptic system, it will have retroactive compatibility with your game! And existing haptic devices like the Novint Falcon can be updated to include support for this haptic system, and then they will work with all games that support it. That is why this kind of system is future-proof, and why it might be particularly relevant to OSVR.

For developers:

Even though the output parameters can accommodate very high-fidelity outputs such as waveforms and surface textures, developers don’t necessarily have to worry about making content for these – they can just re-use the assets they have already created: namely decals, terrain textures and sound effects.

For example, the most common haptic output is taking damage. The quickest and cheapest way to get this running is simply to feed into the Haptic Vector the direction the damage came from and the amount of damage inflicted, feed into the Haptic Waveform the sound file for the damage effect, and feed into the Haptic Surface Texture the decal of the bullet wound that would be applied to the character. This gives different and specific haptic effects for different damage types, magnitudes and directions, but even if the developer failed to feed in one or two of these things, the system would still make use of whatever it was given. If all they fed into the haptic system was damage magnitude, then it could still make use of that. But ideally game studios would one day have a haptic designer in the same way that they have a sound designer today – someone whose job it is to create specific effects for all the events in the game, and to make sure all those effects are as good as possible.

But even if you don’t have the time and resources to handcraft all of these effects, then you can still just throw in damage direction, magnitude and sound effect. They may not be particularly realistic haptic effects, or as fun as possible, but they will definitely be interesting and different from each other, and that will be far superior to nothing. It’s a good short-term solution.
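On the game side, the quick-and-cheap damage case might look something like the sketch below. The `damage_event` function, its dictionary keys and the `max_damage` scale are all hypothetical; the point is only that missing inputs are left out rather than treated as errors.

```python
def damage_event(amount, direction=None, sound_samples=None,
                 decal_pixels=None, max_damage=100.0):
    """Build a haptic event from assets the game already has.

    Only `amount` is required; anything else the developer did not
    supply is simply absent from the event.
    """
    magnitude = max(0.0, min(1.0, amount / max_damage))
    event = {"magnitude": magnitude}
    if direction is not None:
        # Scale the (unit) direction the damage came from by its magnitude.
        event["vector"] = tuple(c * magnitude for c in direction)
    if sound_samples is not None:
        event["waveform"] = list(sound_samples)
    if decal_pixels is not None:
        event["surface"] = [list(row) for row in decal_pixels]
    return event


# Magnitude only: still a usable event.
print(damage_event(25))
# Direction plus the existing hit sound.
print(damage_event(50, direction=(1.0, 0.0, 0.0), sound_samples=[0.0, 0.9, -0.4]))
```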

For Devices:

Integration with devices is as simple as giving them a default profile of locations on the bubble and / or body. For example, a gamepad’s haptic profile might simply be in the centre of mass of the player’s body, or maybe it would occupy two points – one in each hand. Either way, if it is the only haptic device you have, then it will be fed every haptic event so the result is the same. But if you also have a haptic vest, then maybe the gamepad should occupy the hands so that chest events are sent to the vest and hand events are sent to the gamepad.
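A device profile could be as small as a list of points, as in this sketch. The coordinates, the profile layout and the `route` function are made up for illustration: each event goes to the device whose closest profile point is nearest to the event.

```python
import math


def route(event_point, profiles):
    """Return the name of the device that should receive the event.

    `profiles` maps a device name to the list of body points it occupies.
    """
    return min(
        profiles,
        key=lambda name: min(math.dist(event_point, p) for p in profiles[name]),
    )


CHEST = (0.0, 1.3, 0.1)
LEFT_HAND = (-0.3, 1.0, 0.4)
RIGHT_HAND = (0.3, 1.0, 0.4)

# Gamepad alone, sitting at the centre of mass: it receives everything.
solo = {"gamepad": [(0.0, 1.0, 0.0)]}
print(route(CHEST, solo))

# Gamepad moved to the hands once a vest is present.
combo = {"gamepad": [LEFT_HAND, RIGHT_HAND], "vest": [CHEST]}
print(route((0.05, 1.35, 0.1), combo))   # near the chest
print(route(LEFT_HAND, combo))
```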

Player Options and Customisation:

The system allows players to:

- Bind their haptic devices to different haptic outputs, just like you can bind your keyboard and mouse inputs to different game actions like spacebar to jump, left-click to shoot. You get a list of all your detected haptic devices and they show up in their default places on your body/bubble, but you can place them anywhere you want if you want to re-map them because your setup is non-standard.

- For body effects: You can place a point in space on the surface of the mesh or inside the mesh. Or like when rigging a character for animation, you can paint vertex weights across the mesh to cover a whole area.

- For bubble effects: You can specify where your output devices are in relation to your centre of mass, and which direction they are pointing, if any. By default they are assumed to be pointing at your centre of mass.

- Adjust sensitivity, and invert or mute any output

- Add an offset for the direction of axes for vectors to compensate for a difference in how your particular equipment is set up.
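The per-output options in this list could be applied to a vector just before it reaches the device, roughly as sketched here. `OutputSettings` and `apply_settings` are hypothetical names, and the axis offset is simplified to a single rotation about the vertical (y) axis.

```python
import math
from dataclasses import dataclass


@dataclass
class OutputSettings:
    sensitivity: float = 1.0
    invert: bool = False
    mute: bool = False
    yaw_offset_deg: float = 0.0   # assumed: offset is a rotation about the y axis


def apply_settings(vector, settings):
    """Apply the player's mute, axis offset, sensitivity and invert options."""
    if settings.mute:
        return (0.0, 0.0, 0.0)
    x, y, z = vector
    angle = math.radians(settings.yaw_offset_deg)
    # Rotate the horizontal components to match how the equipment is set up.
    rx = x * math.cos(angle) + z * math.sin(angle)
    rz = -x * math.sin(angle) + z * math.cos(angle)
    scale = -settings.sensitivity if settings.invert else settings.sensitivity
    return (rx * scale, y * scale, rz * scale)


push_right = (1.0, 0.0, 0.0)
print(apply_settings(push_right, OutputSettings(sensitivity=0.5, invert=True)))
print(apply_settings(push_right, OutputSettings(mute=True)))
```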

For this to be maximally useful, especially in terms of player customisation of sensitivities and output bindings, it would have to work like a gaming peripheral driver: You can create a general default profile that will work for all games unless you have created a different profile for the specific game that you are opening up. Like gaming peripheral drivers, it would detect the game being opened, look for a profile attached to a game of that name, and if it didn’t find any, activate the default profile.
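The profile lookup itself is tiny. In this sketch the profile contents, the `"default"` key and the case-insensitive matching are assumptions; how the running game would actually be detected is left out.

```python
def select_profile(game_name, profiles, default_name="default"):
    """Return the profile saved for this game, or the default profile."""
    # Assumption: profile keys are stored in lower case.
    return profiles.get(game_name.lower(), profiles[default_name])


profiles = {
    "default": {"sensitivity": 1.0},
    "space shooter": {"sensitivity": 0.6},   # hypothetical game title
}

print(select_profile("Space Shooter", profiles))
print(select_profile("Some Other Game", profiles))
```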

It also opens up potential for prototyping: Anyone can get an Arduino and some desk fans or some other homemade device, create an interface for it for the universal haptic system, and then play some games with haptic system compatibility to test out their prototype.